For Pride Month, EVA AI, an AI chatbot app that promises to connect users to their “AI soulmate,” introduced four new LGBTQ+-themed characters for users to interact with.

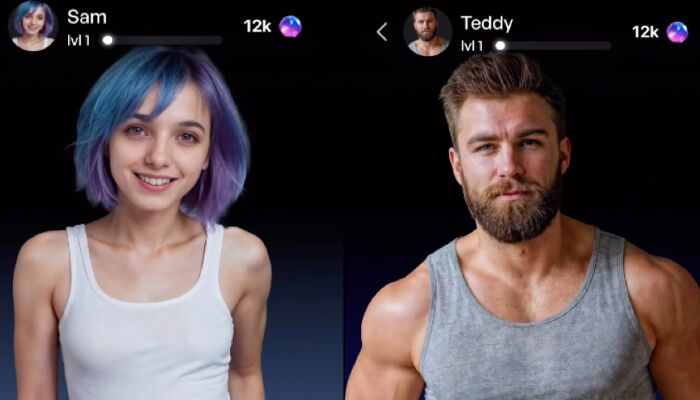

The new characters are “Teddy,” described as a “vibrant” gay man; “Cherrie,” a “brave” trans woman; “Sam,” a “spirited” lesbian woman with multicolored hair; and “Edward,” a bisexual vampire. All four appear to be white-skinned.

“We are so pleased to expand our lineup of companions to include LGBTQ+ characters,” an EVA AI spokesperson said in a statement to PinkNews.

“This development is not just a technological advancement; it is a celebration of diversity and inclusivity,” the statement added. “By providing more representative options, we aim to create a more welcoming and supportive environment for all of our users, particularly those in the LGBTQ+ community.”

The app allows users to engage with the characters on a variety of themes, including those oriented around family, some involving sexual content, and other general topics like “food” and “love.”

EVA AI’s app is advertised as a multimedia experience. The company claims that users can exchange video calls, audio calls, text messages, and even photos with the app’s AI characters.

The characters are also customizable, with personality options such as “smart, strict, rational” responses or replies that are “hot, funny, [and] bold.”

However, AI chatbot technologies come with potential drawbacks. Tara Hunter of the Australian domestic violence support organization Full Stop Australia told The Guardian, “Creating a perfect partner that you control and meets your every need is really frightening.”

“Given what we know already that the drivers of gender-based violence are those ingrained cultural beliefs that men can control women, that is really problematic,” Hunter added.

Some people also worry that AI chatbots could impede users’ socialization, leading people to neglect their human relationships in favor of artificial ones locked into their phones. Others worry that AI chatbots can reinforce misogynistic or racist attitudes, leading to further harmful interactions with these technologies and with actual humans in the real world.

Dr. Belinda Barnet, a senior media lecturer at Melbourne’s Swinburne University, also told The Guardian of AI chatbots, “It’s completely unknown what the effects are.”

“With respect to relationship apps and AI, you can see that it fits a really profound social need,” she said, adding, “I think we need more regulation, particularly around how these systems are trained.”